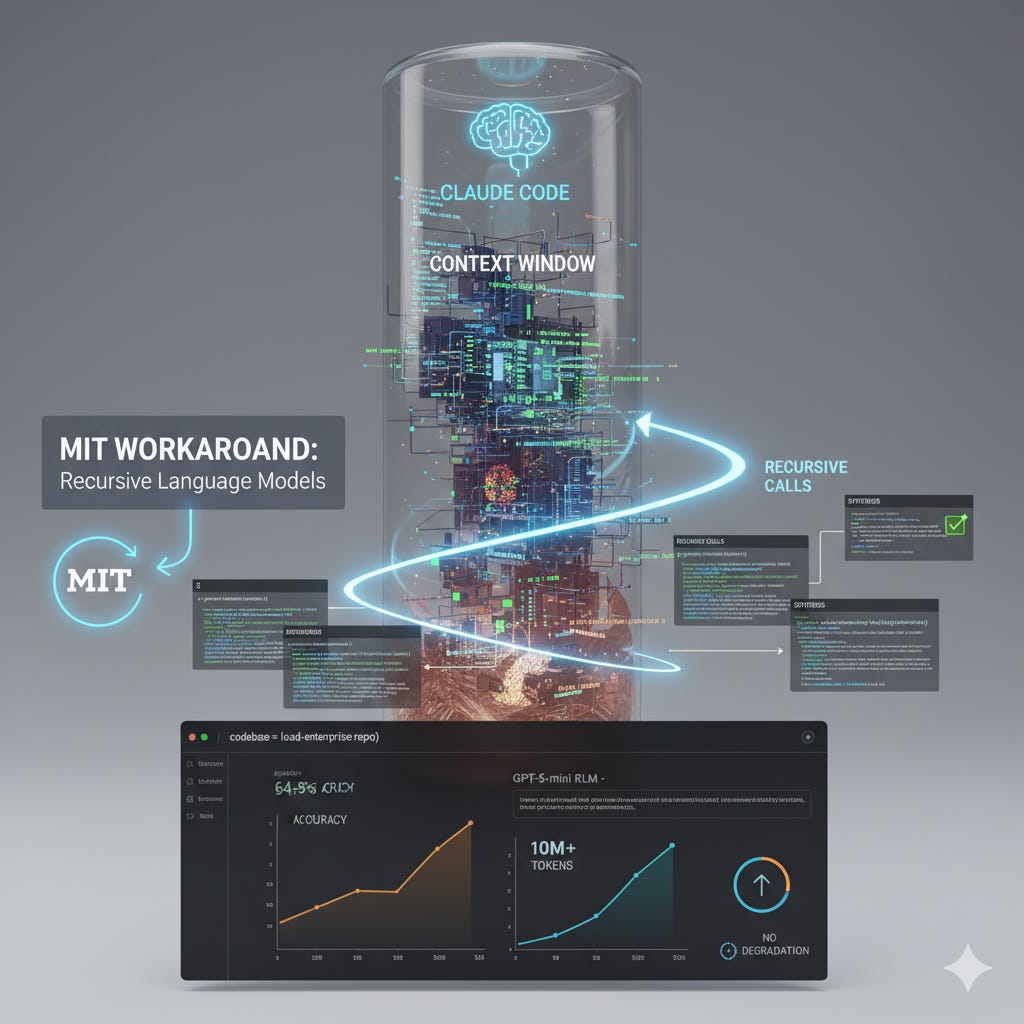

Context rot is real. MIT just found the workaround.

Recursive Language Models let AI explore code iteratively instead of loading everything at once

Your codebase is 180,000 lines.

Claude Code’s context window is 200,000 tokens (roughly 150,000 words).

Seems fine, right?

Wrong.

By token 50,000, Claude starts forgetting your architecture decisions. By token 100,000, it’s rewriting patterns you established at the start. By token 150,000, it’s essentially working blind.

MIT researchers just published early results on a potential solution: Recursive Language Models that treat your entire codebase as a Python variable, recursively exploring it without ever loading it all into context.

GPT-5-mini using this approach achieved over double the performance of GPT-5 (64.9% vs 30.3%) on long-context tasks, maintained similar costs on average, and handled 10M+ tokens without degrading.

The research blog dropped in October 2025. Here’s what it means for anyone working with large codebases.

The Enterprise Codebase Reality

180,000 lines isn’t unusual. That’s a mid-sized SaaS application. Maybe 400 files across frontend, backend, database migrations, tests, configs.

If you could somehow load all of it into context, you’re looking at roughly 240,000 tokens. You can’t. And even if you could, you wouldn’t want to.
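A rough way to sanity-check a figure like this on your own repository is sketched below. It assumes about four characters per token, which is a common rule of thumb and not an exact tokenizer count; `estimate_tokens`, `fits_in_window`, and the sample data are hypothetical names made up for illustration.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate. chars_per_token is an assumed heuristic."""
    return int(len(text) / chars_per_token)


def fits_in_window(files: dict[str, str], window_tokens: int = 200_000) -> tuple[int, bool]:
    """Sum the estimates for every file and compare against a context window."""
    total = sum(estimate_tokens(src) for src in files.values())
    return total, total <= window_tokens


# Toy data standing in for real file contents (path -> source text).
sample = {"a.py": "x" * 400, "b.py": "y" * 800}
total, ok = fits_in_window(sample, window_tokens=250)
print(total, ok)
```

For a real count you would swap the heuristic for the tokenizer of whichever model you use.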

Your team’s architectural decisions are scattered everywhere. The auth pattern lives in middleware/auth.js. The database connection logic is in db/connection.py. The error handling strategy got documented in a comment in utils/errors.ts six months ago.

Claude Code can’t hold it all. So it either:

Guesses based on partial context (dangerous)

Asks you to explain things it should already know (tedious)

Uses RAG, which requires setup and maintenance (overhead)

None of these are great options.

Why Current Solutions Fall Short

RAG and vector search:

You need an embedding pipeline. Re-index on every commit or risk stale results. And vector similarity misses architectural patterns that span multiple files. It can’t reason about relationships between distant pieces of code.

I’ve built RAG systems. They work for documentation search. For code? The maintenance burden is real.

Bigger context windows:

Cost scales linearly or worse. Gemini 2.5 Pro has a 1 million token context window. Great. Processing that costs $70+ per request and takes forever.

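And context rot still happens. Researchers at Stanford and their collaborators documented the “lost in the middle” phenomenon: models use information at the start and end of a long context far more reliably than information buried in the middle. The detail is in the window, but the model fails to retrieve it during generation.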

Compression:

You lose critical details. Can’t recover information once it’s compressed. You’re forced to choose what to forget before you even know what you’ll need.

All of these approaches assume the same thing: you need to fit your codebase into context.

What if you don’t?

Stop Cramming, Start Exploring

The RLM approach from MIT is different.

Store your codebase as a variable in a Python REPL environment. Claude doesn’t load it all at once. Instead, it explores recursively:

grep for patterns across files

Read specific files when needed

Call itself recursively on subsets

Build understanding iteratively
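The first two steps can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: the `codebase` dictionary, its file contents, and the `grep` and `read_file` helpers are all hypothetical stand-ins for whatever the REPL environment actually exposes.

```python
import re

# Hypothetical stand-in for a real repository: path -> file contents.
codebase = {
    "middleware/auth.js": "function requireAuth(req, res, next) {\n  next();\n}\n",
    "db/connection.py": "def get_connection():\n    return connect(DSN)\n",
    "utils/errors.ts": "// Strategy: wrap all errors in AppError\n"
                       "export class AppError extends Error {}\n",
}


def grep(pattern: str, files: dict[str, str]) -> list[tuple[str, int, str]]:
    """Return (path, line_number, line) for every line matching the regex."""
    rx = re.compile(pattern)
    hits = []
    for path, text in files.items():
        for n, line in enumerate(text.splitlines(), start=1):
            if rx.search(line):
                hits.append((path, n, line.strip()))
    return hits


def read_file(path: str, files: dict[str, str], max_chars: int = 2000) -> str:
    """Return at most max_chars of one file, so a single read stays small."""
    return files[path][:max_chars]


# The model would issue calls like these instead of reading everything.
for path, line_no, line in grep(r"AppError", codebase):
    print(f"{path}:{line_no}: {line}")
```

The point of the design is that the model only ever sees the few matching lines or the one file it asked for, never the whole dictionary.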

Instead of processing 200,000 tokens in one massive context, it processes maybe 20 chunks of 10,000 tokens. It loads only what it needs for each recursive call.

The root language model (depth=0) never sees your full codebase. It orchestrates. It decides what to look at next. It spawns recursive calls (depth=1) to analyze specific pieces.

Each recursive call is focused. Small context. Clear task. Then it returns results to the root model, which synthesizes everything.
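Here is a small sketch of that root-and-subcall pattern, under loud assumptions. The `rlm` function, the `LLM` callable type, and `fake_llm` are hypothetical; chunk sizes are measured in characters rather than tokens; and the split is a fixed one, whereas the approach described above lets the root model decide what to inspect next.

```python
from typing import Callable

LLM = Callable[[str], str]  # prompt in, text out; a real client is assumed


def chunk(text: str, size: int) -> list[str]:
    """Split text into consecutive pieces of at most `size` characters."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def rlm(query: str, context: str, llm: LLM,
        chunk_size: int = 10_000, depth: int = 0) -> str:
    # Base case: the context is small enough to hand to the model directly.
    if len(context) <= chunk_size:
        return llm(f"[depth={depth}] {query}\n---\n{context}")
    # Recursive case: each piece goes to a focused sub-call one level deeper.
    partials = [rlm(query, piece, llm, chunk_size, depth + 1)
                for piece in chunk(context, chunk_size)]
    # The caller only sees the short notes returned by its sub-calls.
    notes = "\n".join(partials)
    return llm(f"[depth={depth}] Combine these notes to answer: {query}\n---\n{notes}")


# Fake model for demonstration: records each prompt and returns a short note.
calls: list[str] = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return f"note({len(prompt)} chars)"


answer = rlm("Where is auth handled?", "x" * 25_000, fake_llm)
print(len(calls), max(len(p) for p in calls))
```

With a 25,000-character context and 10,000-character chunks, the fake model is called once per chunk plus once at the root, and no single prompt contains the full context.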

This is how the researchers got GPT-5-mini to outperform GPT-5. Not by making the model smarter. By changing how it interacts with large amounts of information.

